Artificial intelligence offers clear benefits: it can reduce human error, save time through automation, provide digital assistance, and support fact-based decision-making. However, awareness of its potential for misuse is growing. The associated risks include increasing dependency on technology (which can erode critical thinking), propagating biases when models are trained on narrow datasets, and imposing environmental costs, because training and large-scale inference require energy- and water-intensive computation.

Data privacy and security are also major concerns. Insecure AI deployments risk exposing sensitive data; according to IBM, only 24% of generative AI (GenAI) initiatives are secured.

This report focuses on one of the most urgent risks: how bad actors exploit AI to launch and scale cyber attacks.

Because AI is widely accessible, attackers can use it to clone voices, fabricate identities, and generate highly convincing phishing content - all aimed at scamming, stealing identities, or compromising privacy and security. AI also enables attackers to automate and scale their efforts, dramatically increasing their reach while reducing the effort required.

According to the TM Threat Intelligence team, since 2020, there have been at least 350 cyber attacks related to artificial intelligence, with 100 in 2025 alone.

How AI Is Being Abused

Phishing and Impersonation

AI helps craft phishing emails that closely mimic trusted contacts. Instead of generic, obviously fraudulent messages, modern attacks analyse publicly available data (social posts, prior emails, professional profiles) to mirror an individual’s writing style and insert plausible personal details. An email that casually references your recent holiday or a deadline you posted about feels legitimate and is far more likely to deceive the recipient.

Attackers are no longer limited to text; they now produce realistic voice and video messages as well. These hyper-realistic audio and visual forgeries, commonly known as deepfakes, can impersonate executives or public figures, enabling high-value fraud such as business email compromise (BEC), extortion, and social manipulation.

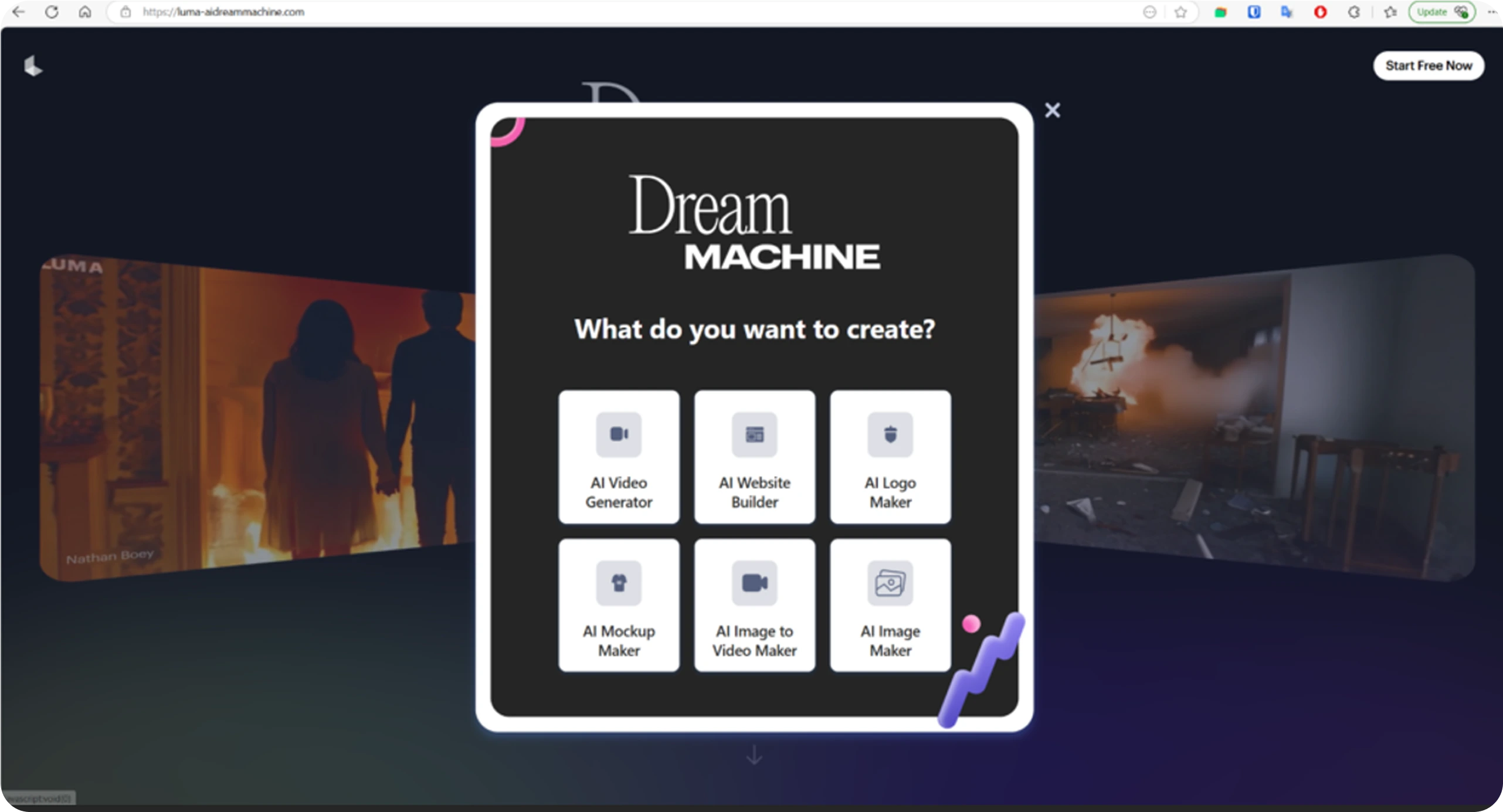

The Noodlophile campaign described below is especially dangerous, not only because it tricks people into risking their money and personal data, but also because the attackers exploit the popularity of AI platforms to spread malware.

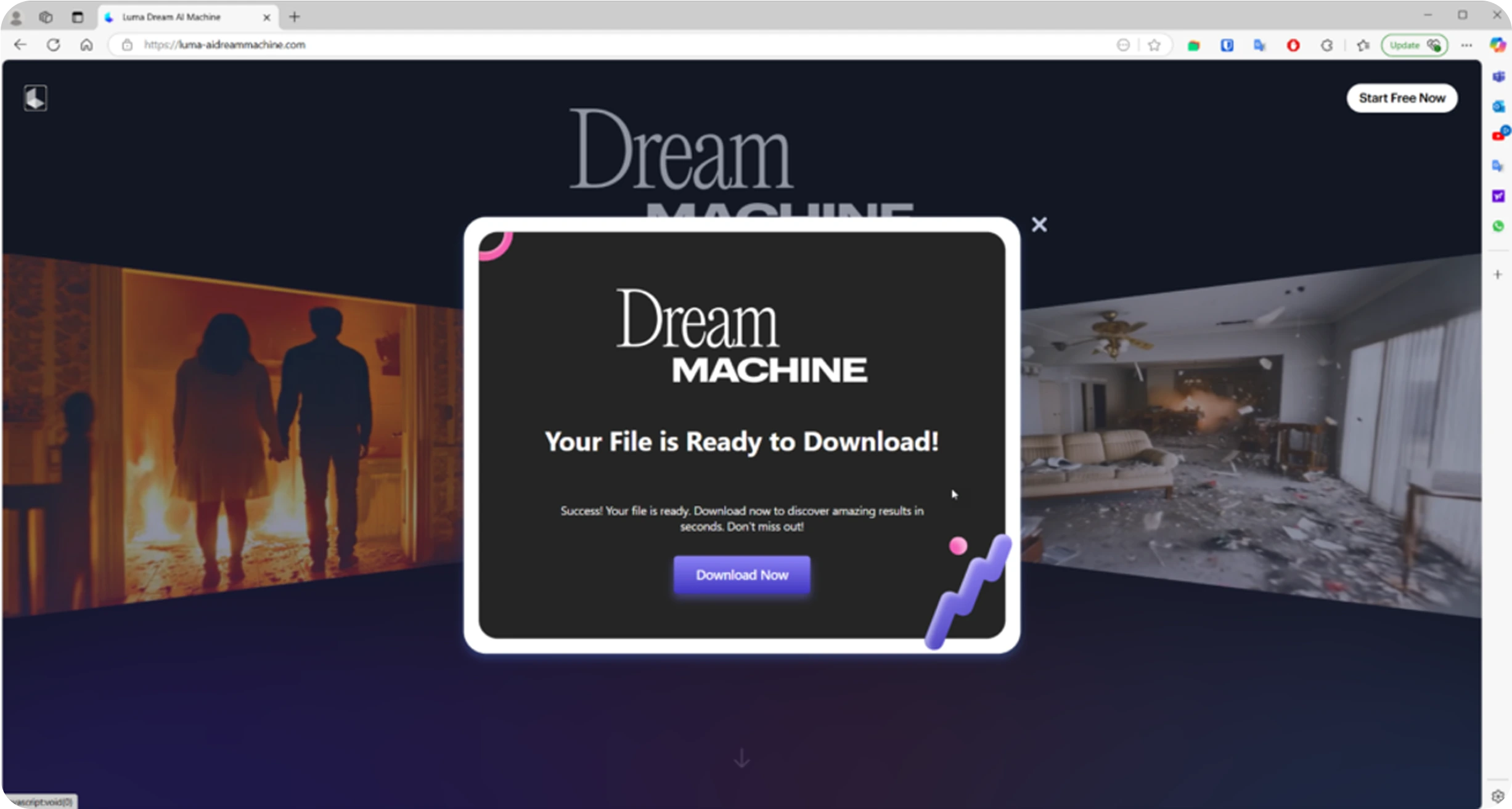

Researchers have uncovered new stealer malware named Noodlophile, which is distributed through fake AI video-generation websites. These sites claim to generate videos from uploaded images but instead deliver malicious files disguised as downloads.

In a significant shift, cyber criminals are weaponising public enthusiasm for AI. Rather than relying on traditional phishing or pirated software, they build convincing AI-themed platforms and promote them aggressively through Facebook groups and viral social media campaigns. Some posts have reached more than 62,000 views, drawing in users eager for free AI tools. Within these groups, users are encouraged to click on links that redirect them to fraudulent websites falsely claiming to offer AI-powered content creation services. Evidence also suggests that the malware is offered as part of a malware-as-a-service (MaaS) scheme.


Once on the fake site, users are prompted to upload their images or videos, believing that they are using a genuine AI tool to generate or edit content. The file then offered for download as the "result" is the malicious payload.


Once installed, Noodlophile can steal sensitive data such as browser passwords, cookies, crypto-wallets, and online account tokens. In some cases, it also installs additional remote-access malware that gives attackers deeper control of the victim’s device. Stolen data is sent back to the attackers through Telegram channels, which they use as a covert communication method.

To reduce exposure, avoid downloading AI tools from unknown sources, train users to spot fake offers, and block the domains and files linked to this campaign.
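As an illustration of the domain-blocking recommendation, the following minimal Python sketch checks a URL against a blocklist, matching subdomains as well as exact hosts. The domains shown are hypothetical placeholders, not indicators from this campaign; in practice the list would be populated from a threat-intelligence feed, and the function name `is_blocked` is our own.

```python
from urllib.parse import urlsplit

# Hypothetical placeholder entries (reserved .example names), NOT real indicators.
BLOCKED_DOMAINS = {"fake-ai-video.example", "free-ai-editor.example"}


def is_blocked(url: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    labels = host.split(".")
    # Check the host itself and each parent domain: a.b.c -> a.b.c, b.c, c
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


if __name__ == "__main__":
    print(is_blocked("https://cdn.fake-ai-video.example/download.zip"))  # True
    print(is_blocked("https://example.org/"))                            # False
```

Matching on parent domains means a new subdomain under a blocked name is caught without a list update. A completely new domain is not, which is why blocking works best alongside user training.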

Social Engineering

A social engineering attack is any cyber attack that manipulates people into acting in the attacker's interest, such as sharing sensitive data, transferring money or ownership of high-value items, or granting access to a system, application, database, or device.

AI can rapidly harvest and fuse scraps of data from across the internet (LinkedIn job titles, public social posts, location tags, etc.) to produce detailed target profiles. With this intelligence, attackers can then craft highly targeted social-engineering scenarios - for example, emails that appear to come from an organisation’s IT team requesting a “password reset”. This automated reconnaissance makes attacks faster, more personalised, and harder to detect.

In practical terms, AI-driven social engineering can be used to:

- Identify an ideal target inside an organisation (i.e. a person who can grant access or approve transactions).

- Build a convincing profile and online presence for communications.

- Design plausible scenarios to capture attention.

- Produce personalised messages and multimedia (audio/video) to engage the target.

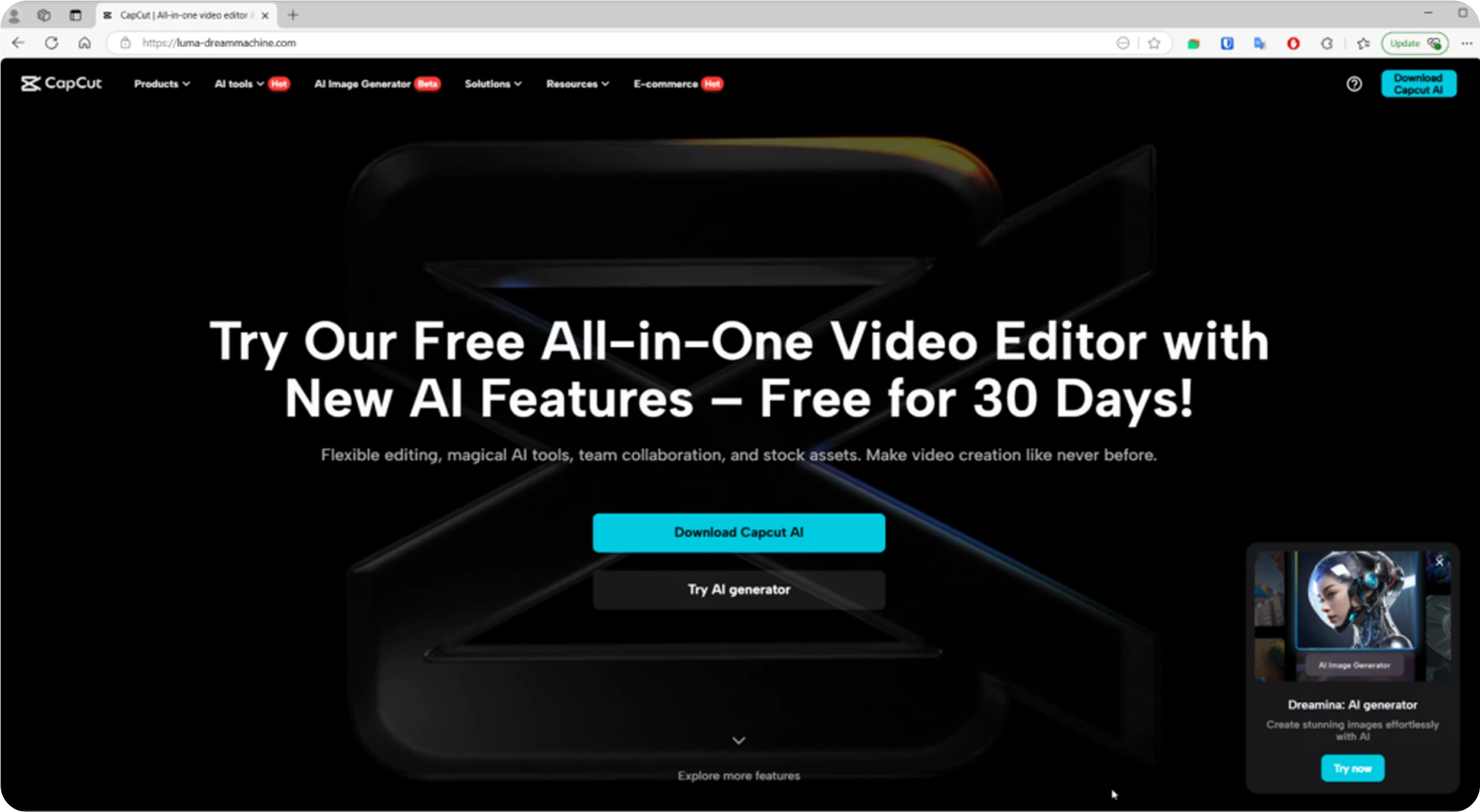

Since 2023, threat actors have increasingly exploited the popularity of AI chatbots and related tools. The rapid adoption of tools such as ChatGPT (launched in 2022 and quickly reaching millions of users) made these brands an attractive lure.

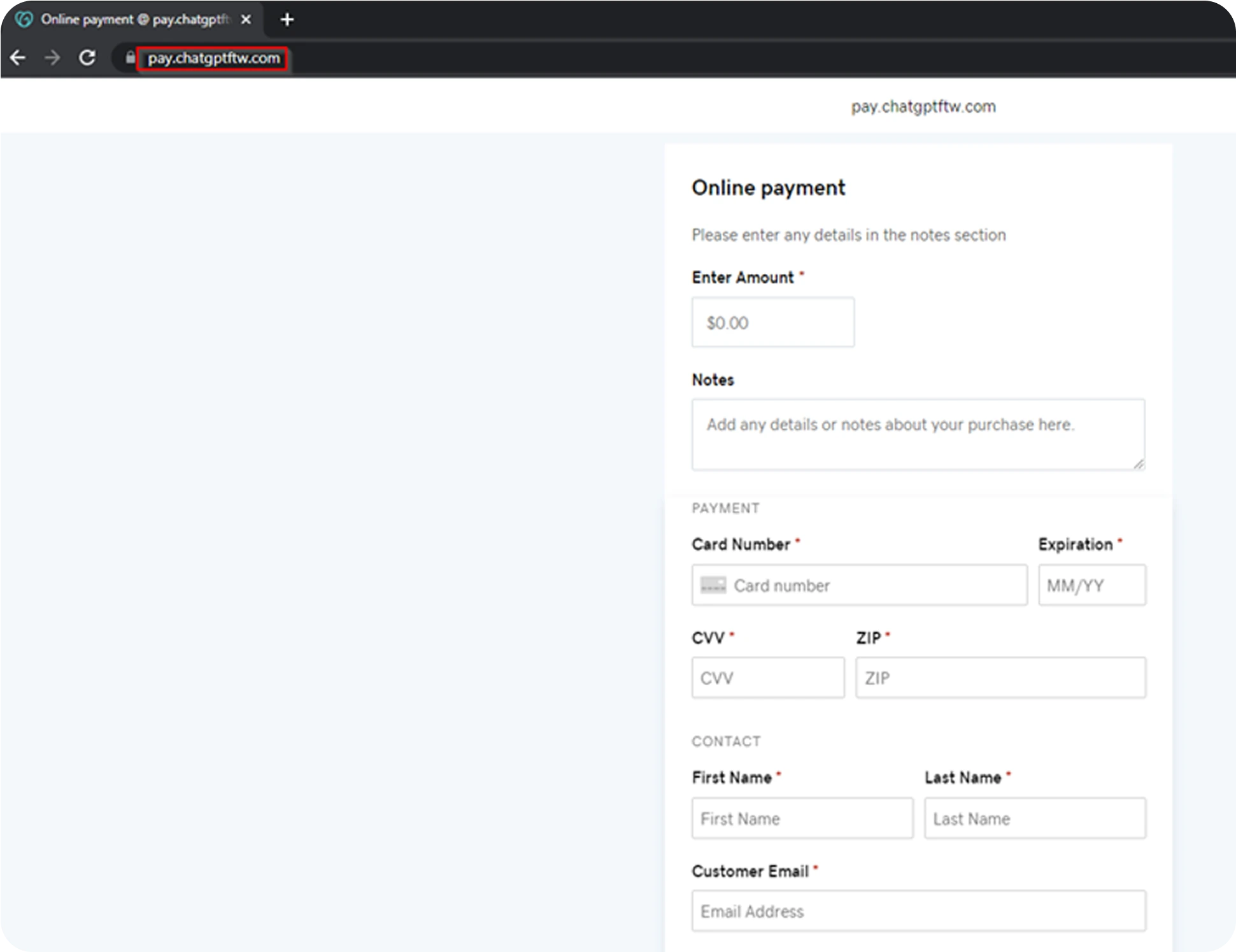

A report from Cyble Research and Intelligence Labs (CRIL) observed multiple phishing campaigns using fake OpenAI/ChatGPT pages promoted via fraudulent social media accounts. Some of these sites host stealers or other malware, while others impersonate payment pages to harvest credit card data.

One unofficial ChatGPT social media page identified by CRIL combined benign-looking posts and videos with links that redirected users to phishing pages mimicking ChatGPT. Beyond malware distribution, attackers also use AI-themed lures for financial fraud, such as fake ChatGPT payment pages designed to steal money and card details.
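One simple defensive heuristic against brand-themed lures of this kind is to flag hostnames that mention a brand but do not belong to a domain treated as official. The sketch below is illustrative only: the allowlist and keyword list are assumptions made for this example, and the function names are our own.

```python
from urllib.parse import urlsplit

# Assumption: domains treated as official for the purpose of this illustration.
OFFICIAL_DOMAINS = {"openai.com", "chatgpt.com"}
BRAND_KEYWORDS = ("chatgpt", "openai")


def is_official(host: str) -> bool:
    """True if host is an official domain or a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


def looks_like_brand_lure(url: str) -> bool:
    """Flag URLs whose hostname mentions the brand but is not official."""
    host = (urlsplit(url).hostname or "").lower()
    mentions_brand = any(keyword in host for keyword in BRAND_KEYWORDS)
    return mentions_brand and not is_official(host)


if __name__ == "__main__":
    print(looks_like_brand_lure("https://chat.openai.com/auth"))        # False
    print(looks_like_brand_lure("https://chatgpt-premium.example/pay")) # True
    print(looks_like_brand_lure("https://openai.com.login.example/"))   # True
```

A heuristic like this will produce false positives (for example, legitimate third-party sites that mention the brand), so it is better suited to raising a warning for review than to blocking outright.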


Equally concerning is that AI models are being leveraged to generate sophisticated attack scripts and tooling, accelerating the evolution of malware and enabling more complex, automated attack chains - a trend discussed further below.

Malware Evolution

AI is also changing malware development. Attackers can design programs that adapt or “mutate” their code to avoid detection, undermining defences that rely on static signatures or known patterns. This adaptive behaviour allows malicious software to persist longer in a compromised environment, quietly exfiltrating data, spying, or disrupting operations while traditional antivirus tools struggle to keep up.
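A small Python sketch shows why static signatures are fragile. It uses an exact SHA-256 match as a deliberately simplified stand-in for a signature (real antivirus signatures are more sophisticated) and a harmless byte string in place of a malicious file. Any change to the bytes, however trivial, yields a different hash.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


# Harmless stand-in for a known-bad file.
original = b"print('harmless stand-in payload')\n"

# Simplified "signature database": exact hashes of known-bad samples.
KNOWN_BAD_HASHES = {sha256_hex(original)}


def matches_signature(data: bytes) -> bool:
    """True only if the bytes hash to a known-bad value."""
    return sha256_hex(data) in KNOWN_BAD_HASHES


# Functionally identical variant: only a trailing comment is added.
variant = original + b"# padding\n"

if __name__ == "__main__":
    print(matches_signature(original))  # True
    print(matches_signature(variant))   # False
```

The variant behaves identically when run but no longer matches the stored hash. Behaviour-based detection sidesteps this by looking at what a program does rather than at its exact bytes.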

Research suggests that by 2026, AI-powered malware will become a standard tool for cyber criminals. Signature-based antivirus products already struggle when a malicious program’s disguise keeps shifting, and the resulting persistence means longer dwell time, more theft, and greater operational impact.

A clear early example is PromptLock, uncovered by ESET in August 2025. PromptLock is a proof-of-concept ransomware that uses a locally hosted large language model (GPT-OSS-20B via the Ollama API) to dynamically generate Lua scripts for file discovery, inspection, exfiltration, and encryption. It is written in Go, and both Windows and Linux variants have been uploaded to VirusTotal. Although it appears to be a proof of concept rather than an actively deployed threat, its design highlights the danger of embedding AI in malware. Local script generation avoids remote AI calls and makes detection harder.

More broadly, AI is lowering the skill floor for cyber crime. Prebuilt toolkits, prompt engineering, and AI-enabled marketplaces allow inexperienced actors to perform attacks that previously required specialist training. As a result, attacks are becoming faster, more personalised, and more persistent.

A lone fraudster can now produce spear-phishing messages that rival the quality of state-sponsored campaigns, or create deepfakes that once required expensive resources. That perceived sophistication can cause misattribution: defenders may assume an advanced persistent threat (APT) is responsible when, in fact, a low-skill actor used off-the-shelf AI tooling - potentially wasting resources and causing strategic errors.

OpenAI has confirmed that threat actors are using ChatGPT to assist with malware development. While these cases have not introduced fundamentally new capabilities, they demonstrate how generative AI can accelerate offensive workflows: code generation, scripting help, debugging, and partial automation across the malware lifecycle.

OpenAI reported disrupting over 20 malicious operations tied to AI misuse, banning accounts and sharing infrastructure indicators with cyber security partners. Its mitigation steps include restricting access, improving detection, and collaborating with threat intelligence communities.

Common malicious uses of large language models (LLMs) observed so far map to typical attack stages:

- LLM-informed reconnaissance: asking how to find vulnerable versions of software (e.g., Log4j) or how to target specific infrastructure.

- LLM-assisted vulnerability research: requesting steps to use tools such as SQLmap or to exploit remote code execution (RCE) vulnerabilities.

- LLM-supported social engineering: generating tailored phishing lures, job messages, or themes that increase engagement.

- LLM-assisted post-compromise activity: asking how to extract macOS passwords or perform lateral movement.

OpenAI and other vendors are taking steps to curb misuse, but defenders must also adapt. Practical changes include deploying behavioural detection (rather than purely signature-based tools), enforcing strong multi-factor and phishing-resistant authentication, restricting software installations, hardening CI/CD and build environments, and training staff to recognise sophisticated social engineering and deepfake content.

Organisations must therefore recognise that AI is not just another emerging technology - it is a transformative force that is levelling the playing field for adversaries. The ability of attackers to weaponise AI with few restrictions demands a proactive, multi-layered response that combines technology, policy, and human resilience.

To Mitigate AI-Enabled Threats, Organisations Should:

- Strengthen technical defences: Enforce multi-factor authentication, implement strict email security controls, and deploy behaviour-based threat detection to counter polymorphic malware.

- Harden processes: Establish out-of-band verification procedures for sensitive requests (e.g., financial transfers, password resets) and regularly test incident response plans for deepfake and AI-driven attacks.

- Enhance human awareness: Train employees and executives to recognise advanced-looking but potentially low-skill attacks, with a focus on verifying authenticity over assuming legitimacy.

- Monitor and share intelligence: Participate in industry threat-sharing networks to stay ahead of emerging AI-enabled tactics, techniques, and procedures (TTPs).

- Adopt long-term resilience strategies: Explore media verification tools and partnerships that focus on content provenance and authenticity.
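
The out-of-band verification step in the list above can be expressed as a simple policy rule: a sensitive request proceeds only once it has been confirmed over a channel different from the one it arrived on. The sketch below is a hypothetical illustration; the action names, channel labels, and the `Request` type are assumptions for this example, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: actions an organisation chooses to treat as sensitive.
SENSITIVE_ACTIONS = {"financial_transfer", "password_reset"}


@dataclass(frozen=True)
class Request:
    action: str                          # what is being asked for
    received_via: str                    # channel the request arrived on
    confirmed_via: Optional[str] = None  # channel used to confirm, if any


def may_proceed(req: Request) -> bool:
    """Allow sensitive actions only after confirmation on a different channel."""
    if req.action not in SENSITIVE_ACTIONS:
        return True
    return req.confirmed_via is not None and req.confirmed_via != req.received_via


if __name__ == "__main__":
    print(may_proceed(Request("financial_transfer", "email")))                    # False
    print(may_proceed(Request("financial_transfer", "email", "phone_callback")))  # True
```

Requiring a different channel is the point of the rule: a deepfake voice call or spoofed email controls only the channel it arrives on, so a callback to a number already on file forces the attacker to compromise a second, independent path.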